Much of the search engine optimization advice found online and at conferences centers on keywords and links. A lot of the spotlight on internet marketing has focused on content and social media. There’s no question that we’ve been strong proponents of these tactics, sharing many, many posts on them.

Content- and link-based SEO relies on search engine crawlers to find web pages and digital assets on their own. Search engines are far from perfect at this, so providing them with structured lists of content via feeds can give some companies a competitive advantage.

At SES New York I moderated a session on Pushing Feeds and XML with Brian Ussery, Amanda Watlington and Daron Babin. Much of what we share on SEO is focused on content and links, so I thought I’d share some of the rich insights offered in this very useful session by asking the panel a few follow-up questions:

How much of an advantage can supplying an XML feed offer a site for indexing and search visibility?

Brian: It’s really difficult to quantify in terms of a percentage, but I’d say the larger your site and the more images you have, the better it is to provide an XML Sitemap.

How important is it for a new site to supply (or make available) an XML sitemap for search engines?

Brian: Sitemaps are one of the best ways I can think of to let engines know about your new pages and images.

Amanda: A new site has no inbound links, hence there is no way for a search engine to find the site. A Sitemap not only provides a point of departure for crawling a new site; by putting one together, a site owner can also include the most important pages. This is particularly useful if the site is quite large. The Sitemap can cue the spider to pages that in fact link much deeper into the site. This jump-starts the indexing process.
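To make Amanda's point concrete, here is a minimal sketch of generating a Sitemap that lists only a handful of key pages. It follows the basic sitemaps.org format (a `urlset` of `url`/`loc` entries). The `build_sitemap` function and the example.com URLs are made up for illustration and are not anything the panel recommended specifically.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a Sitemap XML document (as a string) listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&" in the URL for us.
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical "key pages" that link deeper into the site.
key_pages = [
    "https://www.example.com/",
    "https://www.example.com/products/",
    "https://www.example.com/categories/",
]
sitemap_xml = build_sitemap(key_pages)
```

The resulting string would typically be saved as `sitemap.xml` at the site root and submitted through the search engine's webmaster tools.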

Would you ever advise a company NOT to use sitemaps?

Amanda: There are very rare occasions where I would not use a Sitemap. Those instances are when the contents of the site are very problematic. When there are lots of duplicate content issues that are in the process of being resolved, it makes little sense to urge the search engines to grab a Sitemap that will only bring them to the site’s woes even faster. Once the issues are settled then the Sitemap becomes an important weapon in the SEO’s arsenal.

Brian: If you have pages that you don’t want indexed, it’s probably best not to include those URLs in your XML Sitemap. Other than those kinds of situations, though, I’d say Sitemaps are the way to go. I would suggest, however, not including XML metadata in Sitemaps unless it’s accurate, correct and up to date.
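Brian's two cautions can be sketched in a few lines: leave out URLs you don't want indexed, and only emit optional metadata (here, `lastmod`) when you actually have a trustworthy value. The function name, the `excluded` set and the sample data below are hypothetical, just one way of illustrating the idea.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_filtered_sitemap(pages, excluded):
    """Build a Sitemap from (url, lastmod) pairs.

    URLs in `excluded` are skipped entirely, and <lastmod> is written
    only when a value is known (lastmod is not None).
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, lastmod in pages:
        if url in excluded:
            continue  # pages we do not want indexed stay out of the Sitemap
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        if lastmod is not None:
            # Only include metadata we believe is accurate.
            ET.SubElement(entry, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical data: one page with a known date, one without, one excluded.
pages = [
    ("https://www.example.com/", "2010-04-01"),
    ("https://www.example.com/about/", None),
    ("https://www.example.com/internal-search/", "2010-03-15"),
]
excluded = {"https://www.example.com/internal-search/"}
filtered_xml = build_filtered_sitemap(pages, excluded)
```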

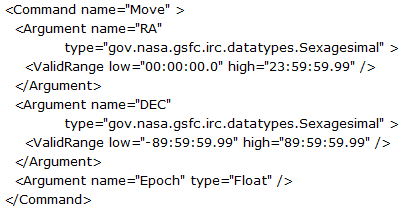

It was interesting to learn during our session about the variety of feed types that can be used, from those associated with web pages to news to video, and I recall one for NASA. Do you have any examples that have called for unusual solutions or uses of feeds?

Amanda: Structured data such as the Sitemap is fascinating in that it provides so many opportunities to communicate information in a machine-readable format.

There are two feed types that we did not discuss during the session. First, there are product feeds, such as those used by Google Base and other comparative shopping search engines. These allow merchants to draw product information quickly and efficiently from their databases and submit it to a shopping engine. Once formatted, a site owner can submit thousands of products with little or no intervention.

The second type of feed was just announced this past week. It is now possible to submit image information (to Google). This has been long awaited. I’ve not yet had a chance to use this, but I have been eagerly awaiting image Sitemaps.
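For readers curious what image information in a Sitemap might look like, here is a rough sketch based on Google's image Sitemap extension, which adds `image:image` and `image:loc` elements under each page's `url` entry. The helper name and the example.com URLs are invented for illustration, so treat this as a starting point and check Google's current documentation before relying on it.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
# Namespace used by Google's image Sitemap extension.
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Ask ElementTree to serialize with a default namespace and an "image" prefix.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(pages):
    """pages maps a page URL to the list of image URLs shown on that page."""
    urlset = ET.Element("{%s}urlset" % SITEMAP_NS)
    for page_url, image_urls in pages.items():
        entry = ET.SubElement(urlset, "{%s}url" % SITEMAP_NS)
        ET.SubElement(entry, "{%s}loc" % SITEMAP_NS).text = page_url
        for image_url in image_urls:
            image = ET.SubElement(entry, "{%s}image" % IMAGE_NS)
            ET.SubElement(image, "{%s}loc" % IMAGE_NS).text = image_url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical product page with two images.
image_pages = {
    "https://www.example.com/products/widget": [
        "https://www.example.com/img/widget-front.jpg",
        "https://www.example.com/img/widget-back.jpg",
    ],
}
image_sitemap_xml = build_image_sitemap(image_pages)
```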

Either way, it’s kind of interesting to see! You can find out more at NASA.

How important is the protocol Pubsubhubbub being promoted by Google? Does it replace the need for autodiscovery?

Brian: Great question, Lee. Pubsubhubbub (PuSH or hubbub for short), in case you haven’t heard, is an open protocol for turning Atom or RSS feeds into streams. Because it requires real feed URLs, autodiscovery isn’t really necessary. So, I don’t see Pubsubhubbub as a replacement for autodiscovery per se, but rather as a more efficient method. Some folks, I’m sure, will continue to use autodiscovery for their feeds, but I think PuSH provides additional advantages that will be favored by most.
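As a rough illustration of the publisher side of the protocol: when a feed changes, the publisher notifies its hub with a form-encoded POST carrying `hub.mode=publish` and `hub.url` set to the feed URL, and the hub then fetches the feed and pushes the update to subscribers. The sketch below only builds that request. The hub and feed addresses are placeholders, and `build_publish_ping` is a name made up for this example.

```python
from urllib.parse import urlencode, parse_qs
from urllib.request import Request


def build_publish_ping(hub_url, feed_url):
    """Build (but do not send) a 'new content' ping for a PubSubHubbub hub."""
    body = urlencode({"hub.mode": "publish", "hub.url": feed_url}).encode("ascii")
    return Request(
        hub_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Placeholder hub and feed URLs; substitute the hub your feed declares.
ping = build_publish_ping(
    "https://hub.example.com/",
    "https://www.example.com/feed.xml",
)

# To actually send the ping you would call urllib.request.urlopen(ping).
```

The feed itself also needs to advertise its hub (a `link` element with `rel="hub"`) so that subscribers know where to subscribe.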

Is there a threshold for how many pages/items should be included in a sitemap feed or how often data is updated to determine whether providing a sitemap is worth it?

Amanda: It is difficult to give an across-the-board threshold for when and how much to include. With retailers, we clearly must look at their seasonality and time the Sitemap submissions to be sure that any new products or category-level pages have been spidered and indexed prior to the season’s start. How much to submit is really tied to how complete the site’s current indexing is.

I personally believe that it is possible to manage the process strategically, making sure that key pages which lead to deeper pages are included. As I mentioned in the session, Sitemaps are not a blunt object, a club, to be used to batter one’s way into the index; rather, they provide a method for strategically informing the search engines of what you want found. I love to fish, salt or fresh water, and I think of Sitemaps with a fishing metaphor. They are bait.

Whether you submit a Sitemap or not, it is important to have a Webmaster Tools account. The Google team responsible for developing this resource continues to make it much richer and more informative. Today, I consider it a powerful resource for knowing just what the most powerful engine is seeing in a site.